Co-edited by Tsung-jen and Huang Xin

In the past, industrial robots always seemed to us like cold pieces of automation equipment. With the application of computer vision, they are slowly becoming a new generation of industrial robots that can sense, think, and even show emotion, and they are now driving a remarkable wave of machine-for-human replacement across the Pearl River Delta, the Yangtze River Delta, and the Northeast. How does machine vision on industrial robots differ from the computer vision applications we commonly see?

In this issue of the hardware innovation open class, we invited Shi Jinbo, founder and CEO of Lie Group Automation, to draw on the company's more than two years of hands-on experience with industrial robots and explain what machine vision on industrial robots means for flexible automation, and what problems come up in applying it.

Shi Jinbo, founder and CEO of Lie Group Automation, holds a PhD in ECE from the Hong Kong University of Science and Technology, where she studied under Professor Li Zexiang, a world-leading robotics expert, and has a deep understanding of advanced robot technology and industry development models worldwide. She founded Lie Group Automation in 2011, dedicated to the research and development of small, light-duty industrial robots. In 2013 the company developed the world's first parallel robot with the drive and controller integrated into one unit, a highly integrated design that manufacturers at home and abroad have since followed. She led the R&D team through bottlenecks such as high-speed vision inspection and developed the world-class Apollo series of parallel robots, the Artemis series of SCARA robots, and related products.

Differences between CV on industrial robots and on service robots

Q: What are the main differences between CV applications on industrial robots and on service robots?

CV began to be applied on robots around 2011, as machine vision was adopted step by step in the assembly of electronic products. As far as I know, companies such as Hikvision (Haikang) mainly do video capture rather than vision algorithms.

If we talk about the differences, they come down to the following aspects.

The first is the scenario. In an industrial robot's environment, the scenes that computer vision sees are relatively simple: industrial components or materials, or a process being monitored. Service scenarios vary much more, for example scenes from daily life, or recognizing people's accessories and facial expressions.

For service robots such as household robots and drones, vision is actually a very important navigation tool: CV is used to measure and model the surrounding environment. Another typical service area is surveillance.

The second is its role. Computer vision solves a few specific things for a robot; on industrial robots, it is used for vision-guided trajectory tracking or positioning.

The third is precision. On industrial robots, the recognition precision of computer vision must be at the millimeter level or better. This covers both static and dynamic recognition precision. Static means the camera and the observed object are at rest relative to each other; recognition precision then depends on the camera's resolution and on whether the object's edges are sharp and its contrast is clear, and it can even reach the micron level or better. For service robots, as far as I know, precision is mostly not high, perhaps at the centimeter level or coarser.
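
To make the link between camera resolution and static precision concrete, here is a minimal sketch. It assumes the simplest possible model, in which one pixel covers an equal slice of the field of view; the function name and all numbers are hypothetical and are not taken from the interview. Real systems often do better than one pixel by locating edges at sub-pixel accuracy, which this sketch ignores.

```python
def mm_per_pixel(field_of_view_mm: float, pixels_across: int) -> float:
    """Width of one pixel projected onto the object plane, in millimetres."""
    if field_of_view_mm <= 0 or pixels_across <= 0:
        raise ValueError("field of view and pixel count must be positive")
    return field_of_view_mm / pixels_across


# Hypothetical setups: the same 2000-pixel-wide sensor at two fields of view.
wide = mm_per_pixel(1000.0, 2000)   # camera covering a 1 m wide area
narrow = mm_per_pixel(50.0, 2000)   # camera covering a 50 mm wide area

print(f"wide view:   {wide:.3f} mm per pixel")
print(f"narrow view: {narrow:.3f} mm per pixel")
```

Under this assumption, shrinking the field of view is what moves a fixed sensor from sub-millimeter toward micron-level resolution, which is why the installation space matters so much.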

The fourth is the working range. On an industrial robot the camera is usually fixed, so the field of view is set by the size of the installation space, and that in turn limits the visual resolution. A service robot's workspace, as I understand it, is limited mainly by how long the battery lasts.

The fifth is safety. Industrial robot vision is rarely disturbed by people, and we try to avoid unnecessary interaction between people and the equipment. A service robot, however, works where there are many people, and vision is one of its most important ways of interacting. Another point is life cycle, which concerns the reliability of the light sources and cameras installed in the vision system.

Overall, I think the most important differences between computer vision on service robots and on industrial robots are precision and reliability. The rest are scenario-specific functional requirements.

Q: What are the latest developments in machine vision sensors for industrial robots?

Let me first list some vision applications on industrial robots. The most common is identifying an object's position and orientation so that the robot can pick it up; accuracy can generally reach about 0.01 mm.

I think of an industrial robot's vision sensor as its whole vision sensing system, where the main considerations are reliability and maintainability. In real use, a new hardware scheme or a new product is not adopted quickly; it has to go through a long validation process. The vision system we talk about generally consists of a camera, a lens, a light source, and a vision processor. In recent years, smart cameras have been used more and more.

Until now, most vision has been PC-based. The most typical vendors are Keyence, Cognex, and Omron: their vision systems all started on the PC, acquiring and processing images from a digital camera and then outputting data through vision algorithms. In recent years, Keyence was the first to deploy its smart cameras on a large scale, in inspection equipment related to Apple.

The advantage of a smart camera is that it is very compactly packaged: the whole unit, lens included, is easy to install, the setup has been greatly simplified, and connecting it to the rest of the equipment is very convenient.

Today most industrial cameras are 2D, and the introduction of 3D will be a very clear trend. However, 3D vision technology has not yet matured to the precision that robots require, so it may take some time to be adopted.

For 2D cameras, our selection mainly depends on the scenario, such as the size of the object to be inspected and the precision required; this part is a matter of specifications. As for whose cameras are better, we mainly choose German and Japanese cameras, such as IDS from Germany and Omron from Japan; for lenses we mainly choose Computar. With quality assured, the products are actually similar and do not differ much. The differences are mostly in usage and installation habits, and price and lead time are also important criteria.

Industrial robots interacting with machines, people, and the environment

Q: Industrial robots involve machine-to-machine, machine-to-human, and machine-to-environment interaction. Can you give an example of how they do that?

This is something we are good at. Lie Group Automation has been doing robot automation since 2013, and most of what we think about now is how robots interact with equipment, with vision, and with people. Before answering, there is one prerequisite: for interaction to happen, the parties must share a protocol, that is, a language both sides can understand.

That language can be simple or complex, and there can be one or several. Even two people with different languages and cultural backgrounds may be able to communicate through eye contact or body language.

Taking machines, people, and the environment in turn, we can analyze the forms in which each can send and receive information. Take a robot as an example: in what forms can a machine express the information it wants to convey?

Generally there are two types.

The first is information the machine sends actively. This might be the machine's state, or something the machine needs to tell people. We consider this controlled information; it is typically sent over a network, a serial port, or I/O, and the machine can also express it through some motion.

The second is exception information. The machine does not intend to send it; it is a passive output. What is a passive output? For example, the machine suddenly produces a defective product; or, while the machine is running, some link or motor moves and then turns out to have moved less than it should. Another case is when the machine does something incomprehensible or not preset. We all agree that the machine is emitting information here, but it is not information the machine chose to send.

People are the highest-level beings here. A person can send the message he wants through tools, for example a user interface or even a few buttons, to issue precise instructions. He can also generate information by touch, for example by tripping a light curtain. On a collaborative robot, touching the machine may even represent an instruction for the robot to stop.

Environmental information is the most passive, since the environment never sends information actively. It may show up as a power supply problem, an abnormal discharge, a change in temperature or humidity, or a sudden power loss, and we do not know what has happened. A simple analysis of all this shows that, in the interaction process, exception information is what needs detection and response plans prepared in advance.

At the same time, people and machines are the two active parties in the interaction. People are more advanced: we can recognize and respond to nearly all information. The biggest problem is that people get tired and have mood swings, which interfere with the timeliness and accuracy of their judgment; human judgment lacks the stability of a machine's.

Machines are not smart enough, certainly not as smart as people, but they can express information more accurately and more comprehensively. What a machine can do really depends on what its designers want it to do.

Coming back to the question, I think the important thing is exception information. In an automation process, if nothing unexpected happens in the three-party interaction and everyone operates according to the planned rhythm and behavior, then interaction is hardly needed: everyone just does what has been specified, which is the most harmonious case. In practice, accidents will certainly happen, and the point of interaction is how to handle them.

So the question becomes how we perceive exceptions and what mechanism we use to negotiate them. All interaction and negotiation ultimately serve to handle the exception and return to the original track.

Here is an example, one of the solutions we built:

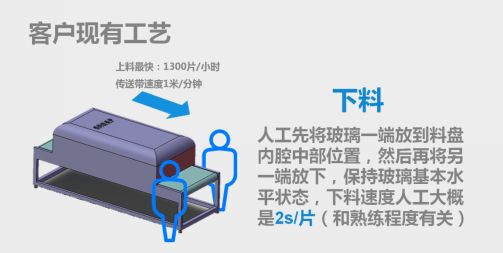

This is a very simple in-line loading and unloading requirement. On the left of the simulated picture is a tunnel furnace. This is a typical process for cell phone cover glass: after the glass is screen printed at the front end, it has to pass through the tunnel furnace to cure the printed ink.

At the front end, screen-printed glass is placed on the conveyor belt piece by piece. The information we were given was a feed rate of roughly 1300 pieces per hour. There is one important process requirement: because the tunnel furnace is baking, the belt must not stop.

Our automation task is to take the glass that has just come out of the tunnel furnace and unload it safely into trays.

Space at the end of the tunnel furnace is very limited: it is where the two people stand in the picture. So the automation equipment has to unload the 1300 incoming pieces per hour into trays quickly; that is the process requirement. Consider that a robot in such a workspace can do about 2000 cycles per hour. At that speed, where are the difficulties and exception points of this automation solution?
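
A quick back-of-the-envelope check of these two figures, as a minimal sketch. It assumes perfectly even spacing between pieces and one piece per robot cycle, which the interview does not state; the function name is illustrative.

```python
def seconds_per_piece(units_per_hour: float) -> float:
    """Average time available per piece at a given hourly rate."""
    if units_per_hour <= 0:
        raise ValueError("rate must be positive")
    return 3600.0 / units_per_hour


FEED_UPH = 1300    # average feed rate quoted by the customer
ROBOT_UPH = 2000   # approximate robot capacity in this workspace

feed_interval = seconds_per_piece(FEED_UPH)   # about 2.77 s between pieces
robot_cycle = seconds_per_piece(ROBOT_UPH)    # 1.8 s per pick-and-place
utilization = FEED_UPH / ROBOT_UPH            # 0.65 on average

print(f"feed interval: {feed_interval:.2f} s")
print(f"robot cycle:   {robot_cycle:.2f} s")
print(f"average load:  {utilization:.0%}")
```

On averages alone the robot has about 35% headroom, so the difficulty is not the mean rate but what happens when reality departs from it.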

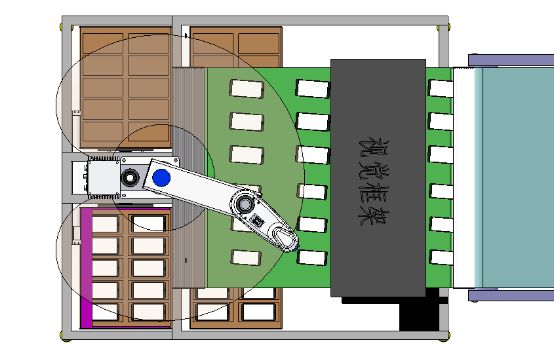

This is our final layout design.

We built a workstation that includes the green belt, which takes over from the belt of the front-end dryer. At the front there is a vision frame that dynamically identifies the position and rotation of the glass, and at the end there are several mechanisms that handle the turnover of the unloading trays. Here are a few of the practical issues we had to think through:

1. Instantaneous UPH. As mentioned, the front end of the dryer is fed manually. What is the problem with manual feeding? Early in a shift, when the worker is fresh, efficiency may stay very high for a stretch; when the worker is tired, it drops. So although the customer's figure is 1300 UPH, imagine that for five minutes the worker is in good form and his instantaneous rate exceeds 2000 UPH. What about the robot? Under those conditions, one robot cannot keep up.

2. Missed or merged detections. Our vision identifies the glass on the conveyor belt. During feeding, some other material may get mixed in, or some other error may occur, so that a piece of glass is not identified. Or two pieces lie so close together that vision cannot resolve their relative positions. In either case, the glass cannot be picked.

3. Tray changeover. When I am about to place glass into a tray, the tray happens to be full and the next tray has not arrived yet. What happens to the glass still on the conveyor?

4. Placement precision between tray and glass. If the belt is 1.2 m wide, as in the part we are looking at, and we use a single camera, then within a 1.2 m wide field of view there is serious distortion at the edges. When picking, say, a 5.7-inch piece of glass, the deviation at the edge may exceed 2 mm, and the glass may then not fit into the tray.

5. Suction cup deformation. Even if vision sees correctly and the robot is very accurate, a damaged suction cup means that the glass, once picked up, still cannot be placed into the tray properly.

These are real exceptions that occur in practice. Whether they are caused by the material or by people, they must be detected and handled in time.
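
The first exception, instantaneous UPH, can be watched for with a sliding-window rate estimate. The sketch below is only an illustration under stated assumptions: the class name, the five-minute window, and the idea of timestamping each piece as vision detects it are all hypothetical and not described in the interview.

```python
from collections import deque


class InstantaneousUph:
    """Sliding-window estimate of units per hour from arrival timestamps."""

    def __init__(self, window_s: float = 300.0):
        self.window_s = window_s
        self._arrivals = deque()

    def record(self, t_s: float) -> float:
        """Register a piece seen at time t_s (seconds); return the current UPH."""
        self._arrivals.append(t_s)
        # Drop arrivals that have fallen out of the window (t_s - window_s, t_s].
        while self._arrivals[0] <= t_s - self.window_s:
            self._arrivals.popleft()
        return len(self._arrivals) * 3600.0 / self.window_s


ROBOT_UPH = 2000  # approximate capacity of one robot, from the interview

monitor = InstantaneousUph(window_s=300.0)
rate = 0.0
for i in range(401):                  # a worker feeding one piece every 1.5 s
    rate = monitor.record(i * 1.5)

if rate > ROBOT_UPH:
    print(f"instantaneous rate {rate:.0f} UPH exceeds one robot's capacity")
```

Until the first full window has elapsed the estimate reads low, so a real system would need a warm-up rule; the point is only that a 1300 UPH average says nothing about a five-minute burst.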

This is our latest-generation machine; if you are interested, you can watch the video of the whole machine on the Lie Group website.

For the exceptions just mentioned, this machine gives us control over the belt: if a piece of glass cannot be picked in time, or a piece slips past, the belt can be stopped. If it cannot be stopped, there is a return belt underneath that keeps the glass from falling into a gap or anywhere else, so that no scrap is produced directly and the piece is recovered in time to avoid loss.

As for accuracy, deviations caused by front-end image distortion or by where the suction cup grips the glass are inevitable. So we added an on-the-fly vision capture function of our own development: after the robot picks up the glass, and while it is still moving, it determines the position of the glass on the suction cup and corrects for it, so that the glass is placed intact at the right position in the tray.
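
The geometry behind such a correction can be sketched in two dimensions. This is a generic rigid-transform calculation, not Lie Group Automation's implementation, which the interview does not describe; the function names and the (x, y, theta) pose convention are assumptions made for illustration.

```python
import math


def corrected_tool_pose(target, offset):
    """Tool pose that puts the glass exactly on the target pose.

    target: (x, y, theta) desired glass pose in the world frame.
    offset: (dx, dy, dtheta) measured glass pose in the tool (suction cup) frame.
    Angles are in radians, lengths in any consistent unit.
    """
    tx, ty, t_theta = target
    dx, dy, d_theta = offset
    tool_theta = t_theta - d_theta
    c, s = math.cos(tool_theta), math.sin(tool_theta)
    # Subtract the offset, rotated from the tool frame into the world frame.
    return tx - (c * dx - s * dy), ty - (s * dx + c * dy), tool_theta


def glass_pose(tool, offset):
    """Forward check: where the glass ends up for a given tool pose."""
    x, y, theta = tool
    dx, dy, d_theta = offset
    c, s = math.cos(theta), math.sin(theta)
    return x + c * dx - s * dy, y + s * dx + c * dy, theta + d_theta


# Hypothetical numbers: the glass sits 1.5 mm / -0.8 mm off centre, rotated 2 degrees.
target = (100.0, 50.0, math.radians(30.0))
offset = (1.5, -0.8, math.radians(2.0))
tool = corrected_tool_pose(target, offset)
print("commanded tool pose:", tool)
print("resulting glass pose:", glass_pose(tool, offset))
```

Measuring the offset after the pick means that errors from edge distortion and from a worn suction cup are both absorbed by the same correction, rather than being handled separately.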

In this process, every interaction is in fact designed: the system has to perceive the relevant information in time during the interaction, and then respond with the appropriate interaction strategy.

Q: Workshops today no longer do mass production and have begun to advocate flexible production, moving from a linear layout to a circular process. What has your company achieved in this regard?

In general, the customers we deal with start from their actual needs and first of all consider automating production.

Most of the automation that is really needed now is for production that is small-batch and multi-variety, but where the products are similar to one another. What flexibility mainly solves is fast changeover between different products, or quick reconfiguration between different processes.

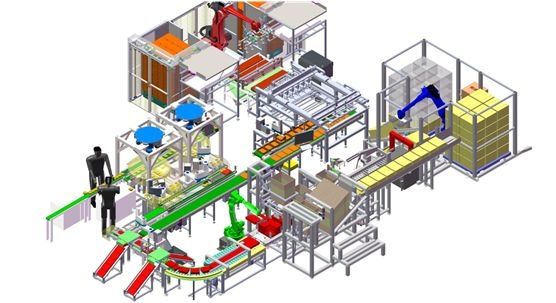

As for a linear layout becoming a circular process, I would say that is not necessarily the case. The layout is actually tailored to each customer's product and process characteristics. Take a mooncake box packaging line we completed last year as an example. You can watch the video of this packaging line directly on our website.

Let me explain this packaging line. In the picture above there is a red robot. We can consider that the start of the line. Its role is to take the tin mooncake boxes off the pallets and place them onto the line in batches.

The next step is to open the box. The supplier delivers empty tin boxes with the lid and the box closed together, so before packing the mooncakes we need to separate the lid from the box.

Because of the packaging requirements, the customer requires that the pattern on the lid align exactly with the pattern on the box; that is, they must be paired according to a given specification. So after the box is opened, there is a workstation that uses vision to adjust the orientation of each pattern.

For the next workstation, follow the conveyor belt to the left of the picture, where there are two blue circles. These are two Delta robots that do high-speed sorting of the mooncakes.

A very important packaging requirement is that the patterns on the mooncakes must follow certain rules and be consistent with the patterns on the box and lid. So as the mooncakes come in, each mooncake's pattern has to be identified and sorted.

The sorted mooncakes are then placed inside the box and the lid is put back on. After that, the boxes are packed 16 to a carton, then weighed and palletized. That is the automated production line in its entirety.

As for the people: because this line is not yet connected to the upstream production, two workers are needed to feed the finished mooncakes into the front of the line. The gift boxes in paper cartons and the bulk mooncakes that we see in supermarkets are not handled by this line.

The flexibility we achieved in this project is mainly for mooncakes in tin boxes like these. Whether the flavors differ or the packaging patterns differ, we achieve changeover with zero downtime: we only need to select a software configuration, and the whole production line switches over to the new process.

To achieve such a flexible workstation, we actually made a very large change to the customer's entire process.

This is perhaps a bit like the facilitator role mentioned just now. The customer's original packaging process was one long row of, as I remember them telling me, about 28 workers. They did everything: taking the incoming mooncakes, opening the boxes, putting the mooncakes inside, closing the lids, scanning codes, packing into cartons, and palletizing.

In that process, the workers not only operated but also inspected: for example, whether a mooncake bag had come open, whether the lid was properly closed, and whether any other exception had appeared. When implementing this station we spent a great deal of time learning the customer's process, to understand which process requirements had to be met and which process arrangements could be changed. Then, drawing on our understanding of automation, we discussed the process with the customer, and both sides sat down to work out the automation solution.

What I really want to say about flexibility is this. Although robots today use vision and other sensors that carry a lot of information, they are still far from being like people, who can get started quickly as soon as a team leader tells them what to do next. Our automation cannot do that yet, so flexibility must be flexibility within a defined range.

In short, flexibility cannot be achieved by the automation people alone. The process side has to consider how to make the process more flexible, and the automation side has to consider how automation technology can realize that flexibility; the two sides interact and learn from each other, and the solution comes out of that. Only then do we get a workstation that is both flexible and, overall, the most efficient.

Problems with CV on industrial robots

Q: As a customer applying robot vision, what problems have you run into, and how do you solve them?

First, the biggest trouble we have with vision right now is that vision products are very, very demanding about lighting.

If the direction of the light source is wrong, the stability and quality of the captured images will greatly affect the final result. Lighting schemes are hard to get right: even for the same type of product, a scheme may not adapt to different batches of material, which introduces instability. Yet during a project we cannot keep replacing the lighting scheme, which I find very inconvenient.

For example, we had a job inspecting PCB boards, and we found that with different PCB processes, the protective coating on top is glossy on some boards and dark and diffusely reflective on others. Different light sources then produce very different reflections.

Second, as I mentioned, the vision we use now is mainly 2D.

In high-precision vision applications, we often find that calibration greatly affects whether the solution's accuracy meets the standard.

But there is no very fast way to do calibration. Every time I replace a fixture, for example, or the customer occasionally maintains or relocates the equipment, the vision system may need to be recalibrated.

On-site workers, and even engineers, have mostly not had that kind of professional training. So we often need service staff to go on site and help the customer recalibrate, and this is a very frequent task. That is our second problem: whether there can be a fast calibration scheme, or even a vision scheme that needs no calibration.

Third, the part that everyone in our automation industry is desperately waiting for is visual defect detection.

Defect detection is a largely unfilled gap across the industry; after all, there are too many kinds of defects. Take the defects on the mobile phone glass we just discussed.

Scratches vary endlessly, and different manufacturers have different requirements for different scratches. It is very, very difficult to turn this into a standardized inspection technique.

When we do automation we talk about replacing people with machines, but can machines really do everything people do? In our experience, what people provide in many production chains, apart from simple handling, is defect detection using their eyes and brains.

If there are, say, ten kinds of defects and we solve nine, one still cannot be handled, and then the manual inspection station cannot be replaced. So to a certain extent, whether there are better defect detection schemes is a very important factor in truly replacing manual labor at scale.

I know of many such cases, and I have many friends in vision who are working on defect detection, but it is very difficult.

The reason is that defects have too many causes and forms. The usual detection schemes today look like this: many people use open-source algorithms such as OpenCV, or do secondary development on top of someone else's vision library, such as the underlying Hoken library. With such architectures it is hard to give the customer anything like intelligent judgment or accumulated experience, so it is hard to identify defects with truly complete reliability.

These three problems at the application level are our most urgent needs. So how do we deal with them?

We cannot solve them at the root, because vision itself is not really our line of work. But what we can do is this:

When we receive a customer requirement, we immediately work with our vision supplier and run a large number of tests to confirm the feasibility of the scheme. We deliberately introduce disturbances that could affect stability and robustness. These tests are done before the equipment is designed and give a complete reference for the later design. Then, in our design, we try not to touch those destabilizing factors: for example, we try not to change the vision scheme, or, if the customer really needs to change it, we give the customer controlled procedures to follow, to avoid the risks I just mentioned.

As for the ideal state, I am not very clear what it should be; we think vision still has a very long way to go. Improving how vision products are defined and developed may require the people who build vision to sit down with us automation people, sort out the problems we face and the customers' needs, and then do product planning and definition.

In addition, we have been talking privately with experienced technical people at vision companies. We are wondering whether a more biologically inspired approach could help vision algorithms, rather than, as now, modeling every defect, describing each of its features, and computing on them.

Q: What do you make of the fact that many robot manufacturers have no core technology?

First of all, I think core technology is very important. A company without core technology will eventually die.

That said, I do not think there is any need to panic. Core technology takes time, and China's robot industry is still in its first stage. Every company needs time to find its position in the industry and its technical direction and route.

Alongside that positioning, even a company that wants to build core technology needs time to accumulate and polish it step by step; it does not happen overnight. So I do not think we need to worry too much right now that domestic manufacturers lack core technology.

Another issue is that many manufacturers claim to have core technology. But is this so-called core technology really core? We often say the core technologies inside a robot are the reducer, the motor, and the controller; will that really still be true for the robots of the future? And if we merely copy some foreign products, or make cheaper versions, and call these things core, are they really useful core technology? I think that will have to be tested by time and by the market.

To address this, I think anyone who cares about the robot industry should pay more attention to what I just mentioned: whether domestic manufacturers really know their own industry positioning, their future development path, and even what their core technology is.

Whether that is clear, whether they are prepared, and whether they have a plan: these are actually the more important matters.

Practice is the best medicine

In this open class, Shi Jinbo focused on how CV applications on industrial robots differ from those on service robots, and used examples to show that the greatest significance of an industrial robot's interaction with machines, people, and the environment lies in how accidents are handled.

On the question of highly flexible automation for industrial robots, her practical experience shows that automation today has not reached 100% flexibility, so what we call flexibility must be flexibility within a defined range.

As a customer of robot vision, Shi drew on hard-won experience to show that current vision products are very demanding about lighting, that 2D vision calibration greatly affects whether a solution finally meets its accuracy target, and that visual defect detection is in a very poor state.

This gives readers who used to think of industrial robots as dumb automation lines a fresh understanding: an industrial robot is by nature a platform-level product. Unlike a service robot, which only needs to do one specific product well, it is more about handling the various problems that arise when the robot interacts with machines, people, and the environment, so as to adapt to the constantly changing needs of the production floor.